Random Forest Classification

Random forests, or random decision forests, are an ensemble learning method for classification, regression, and other tasks that works by building many decision trees at training time. For classification, the forest outputs the class selected by the most trees; for regression, it returns the average of the individual trees' predictions. Random forests reduce decision trees' tendency to overfit their training set. They generally outperform a single decision tree, although gradient boosted trees are often more accurate, and the characteristics of the data can affect how well each method performs.
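
The two output rules can be illustrated with a minimal Python sketch of how a forest might combine its trees' outputs for one input row. The function names and tree outputs are hypothetical, not taken from any library. Note that scikit-learn's RandomForestClassifier actually averages the trees' class probabilities instead of counting hard votes, which usually, but not always, selects the same class.

```python
from collections import Counter
from statistics import mean

def forest_classify(tree_votes):
    """Return the class predicted by the most trees (hard majority vote)."""
    # most_common(1) -> [(label, count)]; ties go to the label seen first
    return Counter(tree_votes).most_common(1)[0][0]

def forest_regress(tree_outputs):
    """Return the mean of the individual trees' numeric predictions."""
    return mean(tree_outputs)

# Hypothetical outputs from five trees for a single input row
print(forest_classify(["A", "B", "A", "C", "A"]))  # A
print(forest_regress([2.0, 3.0, 4.0, 3.0, 3.0]))   # 3.0
```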

For our project, we will use a Random Forest Classifier.

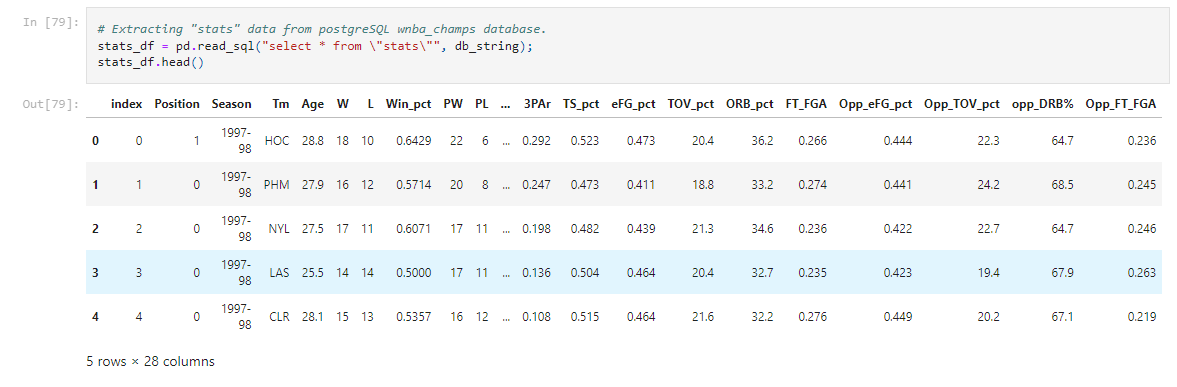

After the datasets are cleaned, both are inserted into a PostgreSQL database. From there, we import them into our model for training.

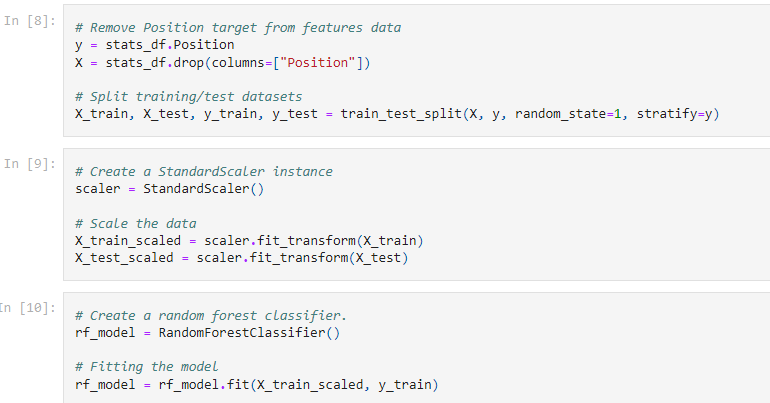

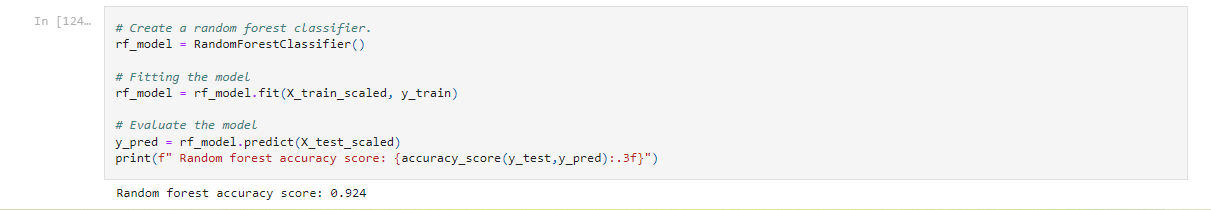

This model is set up the same way as the Logistic Regression and Decision Tree models. Unneeded features are dropped, the 'Position' feature is set as the target (y), and the remaining features are assigned to X. The data is then split into four groups (X_train, X_test, y_train, and y_test), which are used to train and test the model.
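
The setup step can be sketched without dependencies as follows. This is a hypothetical, stdlib-only stand-in for what is more commonly done with pandas and scikit-learn's train_test_split; the function name, the column names other than 'Position', the 25% test size, and the seed are all assumptions, not values from the project.

```python
import random

def prepare_and_split(rows, target="Position", drop=(), test_size=0.25, seed=42):
    """Drop unneeded columns, separate the target, and split into 4 groups."""
    # X holds every column except the target and the dropped ones
    X = [{k: v for k, v in row.items() if k != target and k not in drop}
         for row in rows]
    # y holds the target labels, in the same order as X
    y = [row[target] for row in rows]
    # Shuffle row indices with a fixed seed so the split is reproducible
    idx = list(range(len(rows)))
    random.Random(seed).shuffle(idx)
    n_test = round(len(rows) * test_size)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test

# Hypothetical rows: only "Position" comes from the original text
rows = [
    {"Team": f"Team {i}", "Season": 2020, "Wins": w, "Losses": 10 - w, "Position": p}
    for i, (w, p) in enumerate([(9, 1), (8, 1), (7, 2), (6, 2), (4, 3), (3, 3), (2, 4), (1, 4)])
]
X_train, X_test, y_train, y_test = prepare_and_split(rows, drop=("Team", "Season"))
print(len(X_train), len(X_test))  # 6 2
print(sorted(X_train[0]))         # ['Losses', 'Wins']
```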

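The Random Forest model is an ensemble algorithm that builds a 'forest' of decision trees trained with the 'bagging' (bootstrap aggregating) method. Bagging draws multiple random samples, with replacement, from the training data and fits a tree to each sample; the trees' predictions are then combined (by majority vote for classification, or by averaging for regression) to give a better estimate than any single tree. A random forest also considers only a random subset of the features at each split, which makes the individual trees less correlated with one another.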
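
The core of bagging fits in a few lines. The sketch below is an illustration, not the project's code: it trains a one-split "stump" on each bootstrap sample and combines the stumps by majority vote. It is plain bagging; a real random forest grows full trees and also samples a random subset of features at each split, which this one-feature toy data cannot show. All function names and data are hypothetical.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-split 'tree': the threshold and side labels with fewest errors."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]   # never empty: t is in xs
        right = [y for x, y in zip(xs, ys) if x > t]
        # Each side predicts its majority label; an empty right side reuses the left
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return lambda x: left_label if x <= t else right_label

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train one stump per bootstrap sample (n rows drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Combine the models' predictions by majority vote."""
    return Counter(m(x) for m in models).most_common(1)[0][0]

xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = ["low", "low", "low", "low", "high", "high", "high", "high"]
models = bagging_fit(xs, ys)
print(bagging_predict(models, 1.5), bagging_predict(models, 7.5))
```

Each stump sees a slightly different sample, so individual stumps can be wrong near the class boundary, but the vote across all of them is far more stable than any single one.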

We measure accuracy by having the trained model predict on X_test and comparing those predictions with y_test. After training on X_train and y_train, the model predicts the correct 'Position' for 92.4% of the rows in the test set.
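
Accuracy is the fraction of test rows where the prediction matches the true label, which is what scikit-learn's accuracy_score computes; a score of 92.4% means roughly 924 correct predictions per 1,000 test rows. A minimal version, with a hypothetical function name and labels:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

# Hypothetical test labels vs. predictions: 3 of 4 match
y_test = ["A", "B", "C", "A"]
y_pred = ["A", "B", "A", "A"]
print(accuracy(y_test, y_pred))  # 0.75
```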

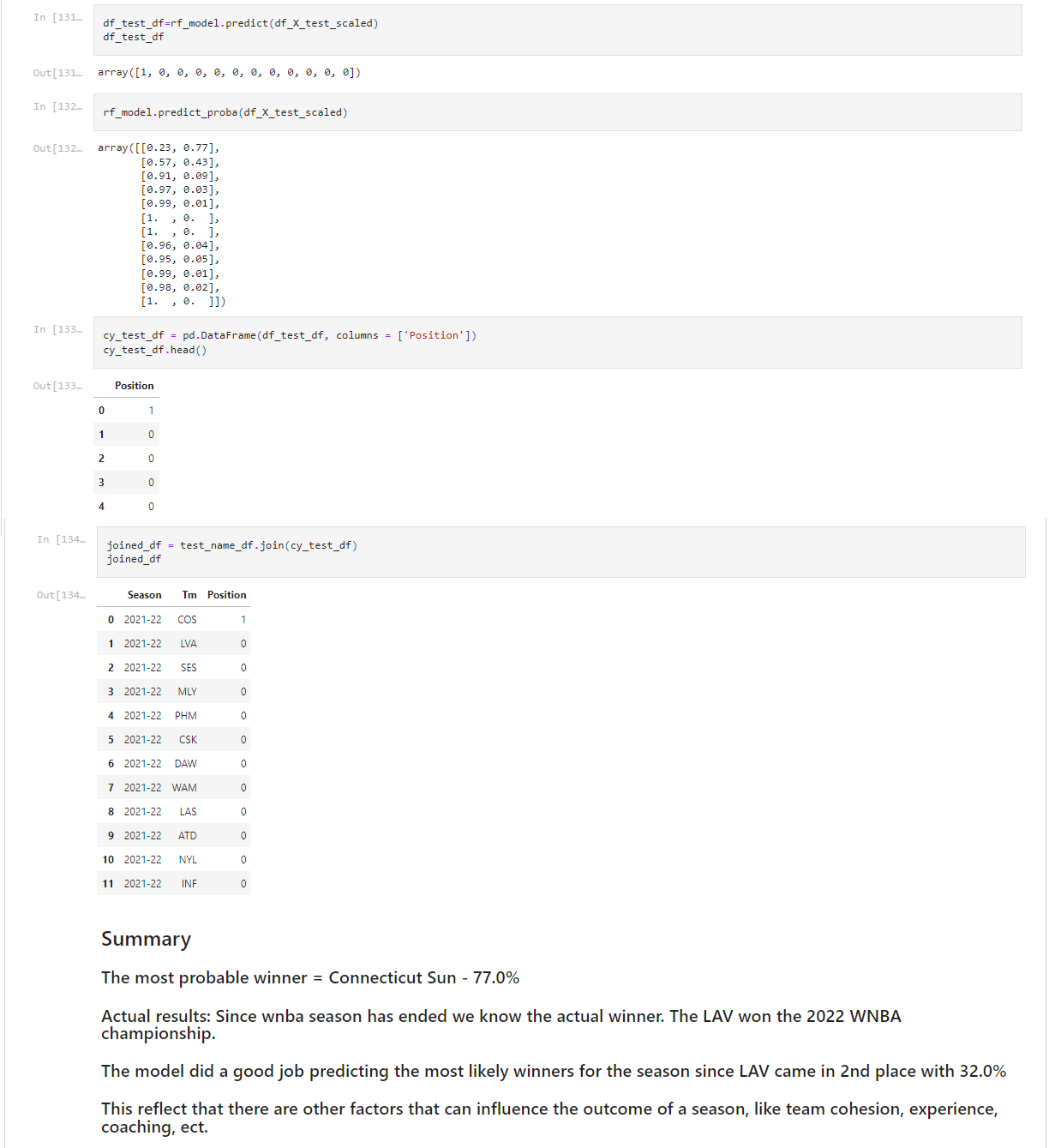

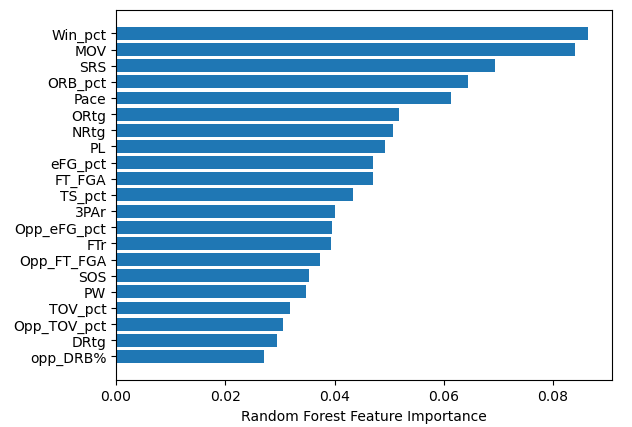

Determining feature importance was more of a challenge with the Random Forest model. The ranking of the features changed each time the model was reloaded and run again. This is likely because each tree is trained on a random bootstrap sample and a random subset of features, so the importance scores vary from run to run unless the random seed is fixed (for example, with the random_state parameter in scikit-learn). The top 3 features did stay consistent across reloads, so we tried removing the remaining features. Retesting after the removal showed a slight drop in accuracy, so we chose to keep all features and give the model more data to train and 'learn' from.

Once the team names and season features are set aside in their own dataframe, the model is ready for the current year's stats to be passed through to produce a prediction. As with the Logistic Regression and Decision Tree models, the array of predicted outcomes is converted to a dataframe and joined with the names dataframe.
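
The final step pairs each predicted 'Position' with the team name and season it belongs to. In pandas this is likely a pd.DataFrame built from the prediction array and combined with DataFrame.join, which aligns rows on the index; the stdlib sketch below shows the same alignment by row order. The function name, the 'Predicted Position' column, and the data are hypothetical.

```python
def attach_predictions(name_rows, predictions, column="Predicted Position"):
    """Pair each name row with the prediction at the same position."""
    if len(name_rows) != len(predictions):
        raise ValueError("need exactly one prediction per name row")
    # Rows and predictions line up by index, like an index-aligned pandas join
    return [{**row, column: pred} for row, pred in zip(name_rows, predictions)]

names = [{"Team": "Team A", "Season": 2023}, {"Team": "Team B", "Season": 2023}]
preds = [2, 1]  # hypothetical model output for the current-year stats
for row in attach_predictions(names, preds):
    print(row)
# {'Team': 'Team A', 'Season': 2023, 'Predicted Position': 2}
# {'Team': 'Team B', 'Season': 2023, 'Predicted Position': 1}
```

Whichever way the join is done, the names must keep the same order (or index) as the stats rows passed to the model; otherwise predictions are attached to the wrong teams.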
